DIY or read through: AWS does not solve this to date, and actually refers clients to the code shared below when asked about it.

ECS provides the freedom to scale different services by adding “tasks”. These operate and communicate independently and are hosted on a series of EC2 instances. Scaling the system’s services in and out, as well as scaling out EC2 hosts, is a convenient task, achieved with the help of CloudWatch metrics and alarms. However, scaling in EC2 hosts requires more complex decision making.

ECS has a three-layer architecture:

- Cluster: a set of applications running on a shared group of resources
- Service: a logical instance (e.g. an “application”)
- Task: a physical instance of a service

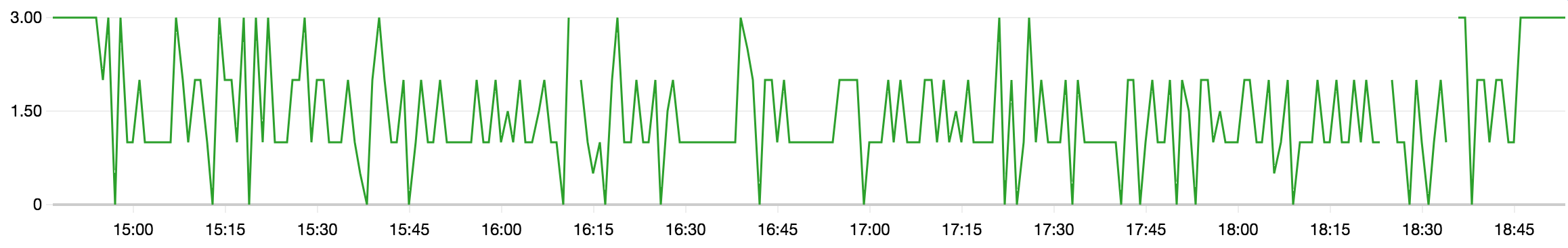
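The three layers can be modeled as plain data structures. This is a hypothetical sketch: the class and field names are illustrative and are not ECS API types. It also hints at why hosts matter for scale-in: a host on which no task is placed is a natural removal candidate.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    """A physical, running instance of a service."""
    task_id: str
    host_id: str  # the EC2 container instance this task is placed on


@dataclass
class Service:
    """A logical application, realized by one or more tasks."""
    name: str
    tasks: List[Task] = field(default_factory=list)


@dataclass
class Cluster:
    """A set of services sharing a group of EC2 hosts."""
    name: str
    hosts: List[str] = field(default_factory=list)
    services: List[Service] = field(default_factory=list)

    def tasks_on_host(self, host_id: str) -> List[Task]:
        # Collect every task, across all services, placed on the given host
        return [t for s in self.services for t in s.tasks if t.host_id == host_id]


web = Service("web", [Task("t1", "i-a"), Task("t2", "i-b")])
cluster = Cluster("prod", hosts=["i-a", "i-b", "i-c"], services=[web])
print([t.task_id for t in cluster.tasks_on_host("i-a")])  # ['t1']
print(cluster.tasks_on_host("i-c"))  # [] : host i-c runs nothing
```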

Scaling tasks in and out is relatively simple: it is based on a couple of metric thresholds, such as CPU load percentage, which determine whether new tasks should be launched or current ones removed. Scaling ECS hosts out is about the same: for example, when a certain CPU level is crossed, or when the cluster doesn’t have enough memory to hold the current load, new EC2 instances are launched.
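For reference, a single-metric policy of this kind boils down to a simple comparison. The sketch below is hypothetical: in ECS the decision is normally made by CloudWatch alarms driving Service Auto Scaling, and the 75%/25% thresholds and one-task step are illustrative values only.

```python
def desired_task_count(current: int, cpu_percent: float,
                       scale_out_at: float = 75.0, scale_in_at: float = 25.0,
                       minimum: int = 1) -> int:
    """Single-metric step policy: add a task above the high CPU mark,
    remove one below the low mark, otherwise keep the current count."""
    if cpu_percent > scale_out_at:
        return current + 1
    if cpu_percent < scale_in_at:
        # Never go below the configured minimum number of tasks
        return max(minimum, current - 1)
    return current


print(desired_task_count(3, cpu_percent=90))  # 4
print(desired_task_count(3, cpu_percent=10))  # 2
```

The policy looks only at CPU, which is exactly what makes it unsafe for hosts: nothing in it knows about memory.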

Removing resources is about cost reduction: these are the resources being paid for, and removing them when they are no longer required improves utilization, which translates into lower costs. However, scaling in is more challenging: applying the general method of crossing a single metric threshold might cut resources that are still needed according to another parameter, for example removing hosts due to low CPU levels while the memory reservation cannot “afford” to lose any. Since AWS doesn’t provide multiple-metric scaling, and such a scale-in requires a few more calculations before deciding to remove a resource, an external tool is required for the task.

The problem

As mentioned, scaling in is not a straightforward task: assuming a host cluster was scaled up due to low available memory, but then scaled down due to low CPU levels, removing instances may again result in low available memory, which will trigger new hosts once more. This can become a never-ending scaling loop.

Never-ending loop: the number of container instances scales up and down repeatedly, as each scale action triggers the opposite one
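The loop can be reproduced with a toy simulation. All numbers are hypothetical: hosts with 4096 MB each, 10,000 MB of reserved task memory, a fixed CPU demand spread evenly across the hosts, a memory alarm that adds a host above 75% reservation, and a CPU alarm that removes one below 30% without looking at memory.

```python
def simulate(hosts: int, steps: int, reserved_mb: int = 10_000,
             host_mb: int = 4_096, cpu_demand: float = 60.0,
             mem_out_at: float = 75.0, cpu_in_at: float = 30.0) -> list:
    """Apply two independent single-metric alarms and record the host count.
    cpu_demand is total CPU load expressed as a percentage of one host."""
    history = [hosts]
    for _ in range(steps):
        mem_pct = 100.0 * reserved_mb / (hosts * host_mb)
        cpu_pct = cpu_demand / hosts
        if mem_pct > mem_out_at:
            hosts += 1  # memory alarm: scale out
        elif cpu_pct < cpu_in_at and hosts > 1:
            hosts -= 1  # CPU alarm: scale in, blind to memory reservation
        history.append(hosts)
    return history


print(simulate(hosts=3, steps=6))  # [3, 4, 3, 4, 3, 4, 3]
```

With three hosts memory is 81% reserved, so a host is added; with four, CPU drops to 15% per host, so one is removed, and the cycle repeats.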

A multi-metric scale trigger is required. Setting aside for a moment the fact that AWS doesn’t offer such a feature, even a multi-metric trigger can loop: if the metric levels after a removal would cross a scale-out threshold, another scale-up is triggered. So forecasting the metrics after removal is also required. There is also the need to decide which instance is the least utilized one to remove, to remove it gracefully by draining client connections before termination, and to clean up resources with 0% utilization.
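A forecast-aware check only removes a host if the memory reservation predicted for the remaining hosts stays safely below the scale-out threshold. This is a minimal sketch, not ECSCALE's implementation: it assumes the removed host's tasks are rescheduled onto the remaining hosts, and the 75% threshold and 5-point margin are hypothetical values.

```python
def reservation_after_removal(reserved_mb: float, host_capacities_mb: list,
                              removed_index: int) -> float:
    """Predicted cluster memory reservation (%) if one host is removed."""
    remaining = sum(host_capacities_mb) - host_capacities_mb[removed_index]
    if remaining <= 0:
        return float("inf")  # removing the last host can never be safe
    return 100.0 * reserved_mb / remaining


def safe_to_scale_in(reserved_mb: float, host_capacities_mb: list,
                     removed_index: int, scale_out_at: float = 75.0,
                     margin: float = 5.0) -> bool:
    """Scale in only if the forecast stays below the scale-out threshold
    by a safety margin, so the removal cannot immediately retrigger it."""
    predicted = reservation_after_removal(reserved_mb, host_capacities_mb, removed_index)
    return predicted < scale_out_at - margin


hosts = [4096, 4096, 4096, 4096]
print(safe_to_scale_in(10_000, hosts, removed_index=0))  # False: ~81% on 3 hosts
print(safe_to_scale_in(6_000, hosts, removed_index=0))   # True: ~49% on 3 hosts
```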

https://github.com/omerxx/ecscale

ECSCALE answers all of the above. It is ready to run on AWS Lambda, which usually means this is a completely free resource, providing a virtual butler that cleans up the mess of unused instances.

The tool is ready to be deployed as a serverless function on AWS Lambda, and as such it only requires ~3000 Lambda seconds every month. The Lambda free tier includes 1,000,000 requests and 400,000 GB-seconds of compute every month, so unless other Lambda workloads already use up that allowance, the tool is in fact completely free of charge. The scaling process runs every 60 minutes (configurable; one hour is the default because EC2 instances were billed for a whole hour even if terminated earlier), iterating over an account’s ECS resources and cleaning them up.
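The arithmetic behind the free-tier claim can be checked in a few lines. The 4-second run time and 128 MB memory size are assumptions (4 seconds per hourly run roughly matches the ~3000 seconds per month quoted above), a month is taken as 30 days, and the free-tier constants reflect AWS's published allowance, which may change.

```python
FREE_REQUESTS = 1_000_000   # Lambda free tier: requests per month
FREE_GB_SECONDS = 400_000   # Lambda free tier: GB-seconds per month


def monthly_lambda_usage(interval_minutes, seconds_per_run, memory_mb=128, days=30):
    """Return (invocations, compute seconds, GB-seconds) for one month."""
    runs = days * 24 * 60 // interval_minutes
    compute_seconds = runs * seconds_per_run
    gb_seconds = compute_seconds * memory_mb / 1024
    return runs, compute_seconds, gb_seconds


def within_free_tier(runs, gb_seconds):
    return runs <= FREE_REQUESTS and gb_seconds <= FREE_GB_SECONDS


runs, secs, gbs = monthly_lambda_usage(interval_minutes=60, seconds_per_run=4)
print(runs, secs, gbs)              # 720 2880 360.0
print(within_free_tier(runs, gbs))  # True
```

At a 20-minute interval the same function gives 2160 runs and 1080 GB-seconds, still far inside the allowance.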

Edit: AWS has recently announced a new EC2 billing model that charges an instance by the second. This means that when an instance is terminated, it is billed for the part of the hour it was running and not for a whole hour. Therefore, it is recommended to run the scaling tool more frequently. Since it makes API calls, and the system should be given time to make unrelated changes such as deployments and service scaling, an interval of 1 second or even 1 minute is not ideal. After running some tests, the sweet spot seems to be a trigger every 20 minutes or so. You should, however, test this in your own cluster and make sure current procedures are not affected by the more frequent changes. Best of luck!
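The effect of the new model on a host that is scaled in early can be worked out directly. This minimal sketch assumes the per-second model's one-minute minimum charge, which applies to the Linux instances covered by the announcement; other instance types may still be billed differently.

```python
import math


def billed_seconds(runtime_s: int, per_second: bool) -> int:
    """Seconds charged for an instance that ran for runtime_s seconds."""
    if per_second:
        # Per-second billing, with a one-minute minimum charge
        return max(60, runtime_s)
    # Hourly billing: every started hour is charged in full
    return math.ceil(runtime_s / 3600) * 3600


# An instance scaled in after 25 minutes
print(billed_seconds(25 * 60, per_second=False))  # 3600
print(billed_seconds(25 * 60, per_second=True))   # 1500
```

Under hourly billing, keeping an idle host until the hour boundary costs nothing extra; under per-second billing every idle minute is paid for, which is why a shorter interval pays off.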

How does it work?

- Iterate over the existing ECS clusters
- Check each cluster’s ability to scale in, based on its predicted memory reservation capacity
- Look for empty hosts, running no tasks, that can be scaled in
- Look for the least utilized scale-in candidate
- Move the candidate to the DRAINING state, draining service connections gracefully
- Terminate a draining host that has no running tasks, and decrease the desired number of instances
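The steps above can be sketched as pure decision logic plus a thin boto3 layer. This is a hypothetical sketch, not ECSCALE's actual code: the function names, the preference order, and the rule never to drain the last active host are assumptions. It operates on items shaped like the `containerInstances` list returned by ECS `describe_container_instances`, and assumes the hosts belong to an EC2 Auto Scaling group and that `ecs` and `autoscaling` are boto3 clients.

```python
def _memory(resources):
    # ECS lists resources as dicts such as {"name": "MEMORY", "integerValue": 3768}
    return next(r["integerValue"] for r in resources if r["name"] == "MEMORY")


def reserved_memory_fraction(instance):
    """Share of a host's registered memory currently reserved by tasks."""
    registered = _memory(instance["registeredResources"])
    remaining = _memory(instance["remainingResources"])
    return (registered - remaining) / registered


def pick_scale_in_action(instances, allow_drain=True):
    """Choose one action for a cluster. allow_drain should be False when the
    predicted memory reservation says the cluster cannot lose a host."""
    active = [i for i in instances if i["status"] == "ACTIVE"]
    draining = [i for i in instances if i["status"] == "DRAINING"]
    # A draining host whose tasks have all moved away can be terminated
    for inst in draining:
        if inst["runningTasksCount"] == 0:
            return "terminate", inst
    # Never drain when forbidden, or when only one active host is left
    if not allow_drain or len(active) < 2:
        return "none", None
    # Prefer an active host that runs (and expects) no tasks at all
    for inst in active:
        if inst["runningTasksCount"] == 0 and inst["pendingTasksCount"] == 0:
            return "drain", inst
    # Otherwise drain the host with the lowest memory reservation
    return "drain", min(active, key=reserved_memory_fraction)


def apply_action(action, inst, cluster, ecs, autoscaling):
    """Carry out the chosen action with boto3 ECS and Auto Scaling clients."""
    if action == "drain":
        ecs.update_container_instances_state(
            cluster=cluster,
            containerInstances=[inst["containerInstanceArn"]],
            status="DRAINING",
        )
    elif action == "terminate":
        autoscaling.terminate_instance_in_auto_scaling_group(
            InstanceId=inst["ec2InstanceId"],
            ShouldDecrementDesiredCapacity=True,
        )
```

Splitting the decision from the API calls keeps the logic testable without AWS access; a host is drained on one run and terminated on a later run once its task count reaches zero.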

Getting it started

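- Create a Lambda function with a maximum runtime (timeout) of 5 seconds, using the provided role policy
- Copy the ecscale.py code into the Lambda function
- Create a trigger that runs it on a schedule (every hour by default; see the edit above regarding a shorter interval)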
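The trigger can also be created programmatically. This sketch uses real boto3 calls (`events.put_rule`, `lambda.add_permission`, `events.put_targets`), but the rule name, statement ID, and target ID are hypothetical, and `schedule_lambda` is meant to run once: repeating it fails on `add_permission` because the statement already exists.

```python
def rate_expression(minutes: int) -> str:
    """Build a schedule expression; AWS expects a singular unit for a value of 1."""
    if minutes <= 0:
        raise ValueError("interval must be a positive number of minutes")
    if minutes % 60 == 0:
        hours = minutes // 60
        return f"rate({hours} hour{'s' if hours > 1 else ''})"
    return f"rate({minutes} minute{'s' if minutes > 1 else ''})"


def schedule_lambda(function_arn: str, minutes: int = 60,
                    rule_name: str = "ecscale-schedule") -> str:
    """Create a scheduled rule that invokes the given Lambda function."""
    import boto3  # imported here so rate_expression works without AWS access

    events = boto3.client("events")
    rule_arn = events.put_rule(
        Name=rule_name,
        ScheduleExpression=rate_expression(minutes),
        State="ENABLED",
    )["RuleArn"]
    # Allow the rule to invoke the function
    boto3.client("lambda").add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    events.put_targets(Rule=rule_name, Targets=[{"Id": "ecscale", "Arn": function_arn}])
    return rule_arn


print(rate_expression(60))  # rate(1 hour)
print(rate_expression(20))  # rate(20 minutes)
```

Calling `schedule_lambda` with your function's ARN and `minutes=20` would apply the interval suggested in the edit above.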

All set: your resources are now taken care of automatically.

Contributions, questions, suggestions:

- github.com/omerxx
- omer@devops.co.il